lz-string: JavaScript compression, fast!

Goal

lz-string was designed to fulfill the need of storing large amounts of data in localStorage, specifically on mobile devices. Since localStorage is usually limited to 5MB, everything you save through compression is that much more data you can store.

You don't care about the blah-blah? Skip straight to "How does it work".

What about other libraries?

All I could find was:

- some LZW implementations which give you back arrays of numbers (terribly inefficient to store, as tokens take 64 bits) and don't support any character above 255.

- some other LZW implementations which give you back a string (less terribly inefficient to store, but still, all tokens take 16 bits) and don't support any character above 255.

- an LZMA implementation that is asynchronous and very slow; but hey, it's LZMA that is slow, not the implementation.

- a GZip implementation not really meant for browsers but for node.js, which weighed 70kB (with deflate.js and crc32.js, on which it depends).

What I needed was different:

- Working on mobile, I needed something fast.

- Working with strings gathered from outside my website, I needed something that can take any kind of string as input, including any UTF characters above 255.

- A library that doesn't weigh 70kB was a definite plus.

- Something that produces strings as compact as possible to store in localStorage.

Is this LZ-based?

I started out from an LZW implementation (no more patents on that), which is very simple. What I did was:

- localStorage can only contain JavaScript strings. Strings in JavaScript are stored internally in UTF-16, meaning every character weighs 16 bits. I modified the implementation to work with a 16-bit-wide token space.

- I had to remove the default dictionary initialization, which is totally useless on a 16-bit-wide token space.

- I initialize the dictionary with three tokens:

  - An entry that produces a 16-bit token.

  - An entry that produces an 8-bit token, because most of what I will store is in the ISO-Latin-1 space, meaning tokens below 256.

  - An entry that marks the end of the stream.

- The output goes through a bit stream that packs a full 16 bits into every character of the output string.

- Each token is written with just as many bits as the current size of the dictionary requires. Hence, the first token takes 2 bits, the second to 7th take 3 bits, and so on.
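To make the bullet points above more concrete, here is a minimal JavaScript sketch of LZW with three reserved dictionary entries. It is a hypothetical illustration, not lz-string's code. `lzwEncode` and `lzwDecode` are made-up names, and the code numbering (0 = 8-bit literal, 1 = 16-bit literal, 2 = end of stream) is this sketch's own choice. It also returns plain numeric tokens rather than a variable-width bit stream, so its output is not compatible with the library. Each character gets a dictionary code the first time it appears in the input, and its literal escape is sent only once.

```javascript
// Hypothetical token-level sketch: LZW with three reserved codes.
// Not lz-string's code and not compatible with its output (no bit packing).
const LITERAL_8 = 0;  // next token is a character code below 256
const LITERAL_16 = 1; // next token is a full 16-bit character code
const END = 2;        // end of stream

function lzwEncode(input) {
  const dict = new Map();    // string -> code
  const pending = new Set(); // characters that have a code but were never sent
  let nextCode = 3;          // 0, 1 and 2 are reserved
  let w = "";
  const out = [];

  // Send s as a code, or as a literal the first time a character goes out.
  const emit = (s) => {
    if (pending.has(s)) {
      const cc = s.charCodeAt(0);
      out.push(cc < 256 ? LITERAL_8 : LITERAL_16, cc);
      pending.delete(s);
    } else {
      out.push(dict.get(s));
    }
  };

  for (let i = 0; i < input.length; i++) {
    const c = input.charAt(i); // one UTF-16 code unit, surrogates included
    if (!dict.has(c)) {
      dict.set(c, nextCode++); // the decoder assigns this code when it reads the literal
      pending.add(c);
    }
    const wc = w + c;
    if (dict.has(wc)) {
      w = wc;
    } else {
      emit(w);
      dict.set(wc, nextCode++);
      w = c;
    }
  }
  if (w !== "") emit(w);
  out.push(END);
  return out;
}

function lzwDecode(tokens) {
  const dict = [];  // code -> string; slots 0..2 stay reserved
  let nextCode = 3;
  let pos = 0;
  let w = null;     // previously decoded entry
  let result = "";

  while (true) {
    let code = tokens[pos++];
    if (code === END) return result;
    if (code === LITERAL_8 || code === LITERAL_16) {
      dict[nextCode] = String.fromCharCode(tokens[pos++]);
      code = nextCode++;
    }
    let entry;
    if (dict[code] !== undefined) {
      entry = dict[code];
    } else if (code === nextCode && w !== null) {
      entry = w + w.charAt(0); // code being defined by this very step ("AAAA" case)
    } else {
      throw new Error("Invalid or truncated token stream");
    }
    result += entry;
    if (w !== null) dict[nextCode++] = w + entry.charAt(0);
    w = entry;
  }
}

console.log(lzwEncode("AAAA")); // [ 0, 65, 4, 3, 2 ]
```

The tokens for "AAAA" are produced as follows:

- A literal "A" comes first.
- Code 4 follows for "AA". The decoder has not built this entry yet when it reads it, which is the classic LZW corner case.
- Code 3 follows for "A".
- The end marker closes the stream.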

How does it work?

Very simple.

- First, download the file lz-string.js from the GitHub repository.

- Second, include it in the page where you want to use it: <script src="lz-string.js"></script>

- Finally, call compress and decompress on the LZString object:

    var string = "This is my compression test.";
    alert("Size of sample is: " + string.length);
    // Compress the sample into a string that packs 16 bits into each character.
    var compressed = LZString.compress(string);
    alert("Size of compressed sample is: " + compressed.length);
    // Decompress to get the original string back.
    string = LZString.decompress(compressed);
    alert("Sample is: " + string);

Browser support & Stability

No exhaustive cross-browser testing has been done, but only basic JavaScript types are used and nothing too fancy either, so it should work on pretty much any JavaScript engine. It has been tested on IE6 and up, Chrome, Firefox, Opera (pre- and post-Blink), Safari, iOS, and on Android with the default browser, Chrome and Firefox. Desktop Chrome, Firefox and Safari were originally tested in all versions current as of May 2013, and it worked on all of them. Of course, if you use compressToUint8Array, you need an engine that supports Uint8Array, so the list of compatible engines is much shorter. You can find the compatible browsers on caniuse.com.

It also works on node.js.

As far as stability goes, extensive testing has been done on this program, on an iPhone, desktop Chrome, desktop Firefox and Android's browser. So far, so good. Additionally, it looks as if quite a lot of other people are using it, so I assume it works for them as well. In fact, the one time I introduced a buggy new feature in the library (I'm more careful now, and unit tests have been added since then), it was spotted very quickly. I take this as a good sign that this library is widely used, and hence that it is stable.

Performance

For performance comparisons, I use LZMA level 1 as a reference point.

- For strings smaller than 750 characters, this program is 10x faster than LZMA level 1 and produces smaller output.

- For strings between 750 and 100 000 characters, this program is 10x faster than LZMA level 1 but produces bigger output.

- For strings bigger than 750 000 characters, this program is slower than LZMA level 1 and produces bigger output.

Come and see for yourself in the demo. There is also a page for developers, Performance experiments, which tries to optimize the whole thing by hunting for CPU cycles, with some results already.

What can I do with the output produced from this library?

Well, this lib produces stuff that isn't really a string. Because it uses all 16 bits of each UTF-16 character, those strings aren't exactly valid UTF-16. By version 1.3.0, I had added two helper encoders (more came later) to produce stuff that we can do something with:

- compress produces invalid UTF-16 strings. Those can be stored in localStorage only on WebKit browsers (tested on Android, Chrome, Safari). They can be decompressed with decompress.

- compressToUTF16 produces "valid" UTF-16 strings, in the sense that all browsers can store them safely. So they can be stored in localStorage on all browsers tested (IE9-10, Firefox, Android, Chrome, Safari). They can be decompressed with decompressFromUTF16. This works by using only 15 bits of storage per character, so the strings produced are about 6.7% bigger (16/15) than those produced by compress.

- compressToBase64 produces ASCII strings representing the compressed data encoded in Base64. They can be decompressed with decompressFromBase64. This works by using only 6 bits of storage per character, so the strings produced are about 167% bigger (16/6) than those produced by compress. It can still significantly reduce the size of some JSON objects.

- compressToEncodedURIComponent produces ASCII strings representing the compressed data encoded in Base64, with a few tweaks to make them URI-safe. Hence, you can send them to the server without URL-encoding them, which saves bandwidth and CPU. These strings can be decompressed with decompressFromEncodedURIComponent. See the bullet point above for considerations about size.

- compressToUint8Array produces a Uint8Array. It can be decompressed with decompressFromUint8Array. It is available starting with version 1.3.4.
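The size figures above follow from how many bits each output character carries. The sketch below packs the same bit stream three ways:

- 16 bits per character.
- 15 bits per character, shifted by an offset of 32 so that no character lands in the surrogate range. The offset is an assumption of this sketch.
- 6 bits per character, using the standard Base64 alphabet.

It is hypothetical: `packBits`, `unpackBits` and the `ENCODINGS` table are illustrative names, not lz-string's API.

```javascript
// Hypothetical sketch (not lz-string's code): pack a compressed bit stream into
// characters that carry `bitsPerChar` bits each, and unpack it again.
function packBits(bits, bitsPerChar, toChar) {
  let out = "";
  for (let i = 0; i < bits.length; i += bitsPerChar) {
    let value = 0;
    for (let j = 0; j < bitsPerChar; j++) {
      // Missing bits at the very end are padded with zeros.
      value = (value << 1) | (bits[i + j] || 0);
    }
    out += toChar(value);
  }
  return out;
}

function unpackBits(str, bitsPerChar, fromChar) {
  const bits = [];
  for (let k = 0; k < str.length; k++) {
    const value = fromChar(str.charAt(k));
    for (let j = bitsPerChar - 1; j >= 0; j--) {
      bits.push((value >> j) & 1);
    }
  }
  return bits; // may end with padding zeros; a real format needs an end marker
}

const BASE64 = "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";

// Three illustrative ways to turn an n-bit value into a character.
const ENCODINGS = {
  // All 16 bits: most compact, but can yield lone surrogates (invalid UTF-16).
  raw16: { bitsPerChar: 16, toChar: (v) => String.fromCharCode(v), fromChar: (c) => c.charCodeAt(0) },
  // 15 bits plus an offset of 32 (this sketch's choice): stays within U+0020..U+801F,
  // well below the surrogate range U+D800..U+DFFF.
  utf16Safe: { bitsPerChar: 15, toChar: (v) => String.fromCharCode(v + 32), fromChar: (c) => c.charCodeAt(0) - 32 },
  // 6 bits per character with the standard Base64 alphabet (no "=" padding here).
  base64: { bitsPerChar: 6, toChar: (v) => BASE64.charAt(v), fromChar: (c) => BASE64.indexOf(c) },
};

// Example: 480 bits of fake "compressed" data.
const bits = Array.from({ length: 480 }, (_, i) => ((i * i + i) % 3 === 0 ? 1 : 0));
for (const [name, enc] of Object.entries(ENCODINGS)) {
  const packed = packBits(bits, enc.bitsPerChar, enc.toChar);
  console.log(name, packed.length); // raw16 30, utf16Safe 32, base64 80
}
```

For 480 bits, this gives 30, 32 and 80 characters, which are exactly the 16/15 and 16/6 ratios. Padding of the last character and marking where the data ends are left out here; a real format has to handle both.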

Only JavaScript? Porting to other languages

NOTE: Go to GitHub to see the up-to-date list (scroll down the page). LZ-String was originally meant for localStorage, so it works only in JavaScript. Others have ported the algorithm to other languages:

- Diogo Duailibe did an implementation in Java: https://github.com/diogoduailibe/lzstring4j

- Another implementation in Java, with Base64 support and better performance, by rufushuang: https://github.com/rufushuang/lz-string4java

- Jawa-the-Hutt did an implementation in C#: https://github.com/jawa-the-hutt/lz-string-csharp

- kreudom did another, more up-to-date implementation in C#: https://github.com/kreudom/lz-string-csharp

- nullpunkt released a PHP version: https://github.com/nullpunkt/lz-string-php

- eduardtomasek did an implementation in Python 3: https://github.com/eduardtomasek/lz-string-python

- I helped a friend to write a Go implementation of the decompression algorithm: https://github.com/pieroxy/lz-string-go

- Here is an Elixir version, by Michael Shapiro: https://github.com/koudelka/elixir-lz-string

- Here is a C++/Qt version, by AmiArt: https://github.com/AmiArt/qt-lzstring

- Here is a VB.NET version, by gsemac: https://github.com/gsemac/lz-string-vb

- Here is a Ruby version, by Altivi: https://github.com/Altivi/lz_string

If you want to port the library to another language, here are some tips:

- Port the compress and/or decompress methods from version 1.0.2. All versions are binary-compatible, and later versions just incorporate ugly optimizations for JavaScript, so you shouldn't bother with them. Version 1.0.2 can be found in the reference directory of the GitHub repo.

- Port (de)compressToBase64 and/or (de)compressToUTF16 from the latest version, depending on your needs.

- Be aware that compress and decompress work with invalid UTF-16 characters, which is why they are used in conjunction with (de)compressToBase64 and/or (de)compressToUTF16. You may want to work with byte arrays instead.

- To test/debug your implementation, just start with simple strings like "ABC". If the results of the compression (or decompression) aren't the same as the JS version, just go step by step in the JS version and then step by step into yours. Spotting problems should be really easy and straightforward. Repeat with strings slightly more complex and with repeating patterns: "ABCABC", "AAAA", etc.

- Post a message on the blog so I can link to your implementation.
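If you go the byte-array route, the key point is to move 16-bit code units as raw bytes. Passing them through a text encoding such as UTF-8 does not work, because UTF-8 cannot represent the lone surrogates that compress may produce. Here is a minimal sketch. The helper names are hypothetical, and the high-byte-first order is chosen for illustration only; this page does not say which byte order compressToUint8Array uses.

```javascript
// Hypothetical helpers: move a string of arbitrary 16-bit code units through bytes
// without any text encoding, two bytes per code unit, high byte first.
function codeUnitsToBytes(str) {
  const bytes = new Uint8Array(str.length * 2);
  for (let i = 0; i < str.length; i++) {
    const unit = str.charCodeAt(i);
    bytes[2 * i] = unit >>> 8;      // high byte
    bytes[2 * i + 1] = unit & 0xff; // low byte
  }
  return bytes;
}

function bytesToCodeUnits(bytes) {
  let out = "";
  for (let i = 0; i + 1 < bytes.length; i += 2) {
    out += String.fromCharCode((bytes[i] << 8) | bytes[i + 1]);
  }
  return out;
}

// A lone surrogate, like those compress can produce, is not valid UTF-16.
const sample = "A\uD800B";
const viaBytes = bytesToCodeUnits(codeUnitsToBytes(sample));
const viaUtf8 = new TextDecoder().decode(new TextEncoder().encode(sample));
console.log(viaBytes === sample); // true
console.log(viaUtf8 === sample);  // false: the lone surrogate became U+FFFD
```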

Feedback

To get in touch, the simplest is to leave me a comment on the blog. For issues, you may go to the GitHub project.

How does it look? How can I inspect localStorage with this?

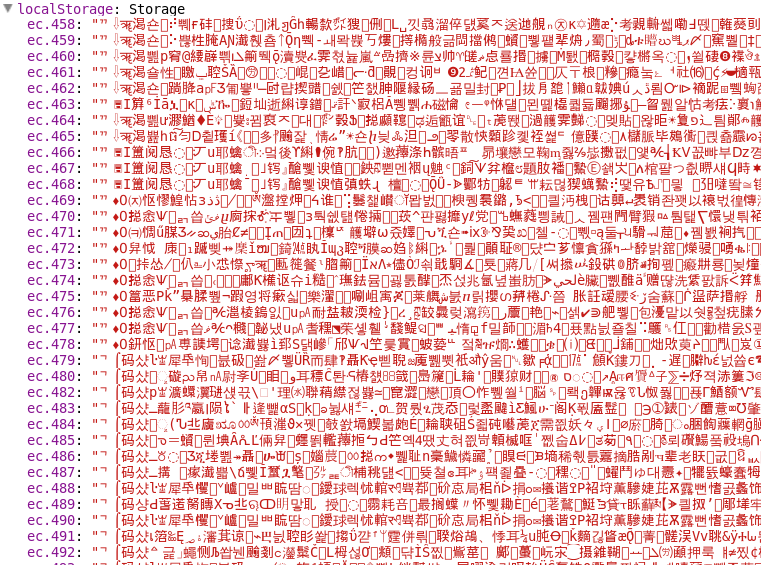

So, this program generates strings that actually contain binary data. What's the look of them? Well, here is a glimpse of my localStorage:

This was first meant as a joke, but then Kris Erickson released a Chrome extension that lets you view the decompressed version directly in Chrome's inspector. Many thanks! The extension's source is on GitHub.

License

Ahhhh, here we go. This software is copyright Pieroxy (2013), and all versions are currently licensed under the MIT license.

Changelog

- More recent changes can be found on GitHub.

- Feb 18, 2015: version 1.3.9 includes a fix for decompressFromUint8Array on Safari/Mac.

- Feb 15, 2015: version 1.3.8 includes small performance improvements.

- Jan 16, 2015: version 1.3.7 is a minor release, fixing bower.json.

- Dec 18, 2014: version 1.3.6 is a bugfix on compressToEncodedURIComponent and decompressFromEncodedURIComponent.

- Nov 30, 2014: version 1.3.5 has been pushed. It allows compression to produce URI-safe strings (i.e., no need to URL-encode them) through the method compressToEncodedURIComponent.

- July 29, 2014: version 1.3.4 has been pushed. It allows compression to produce a Uint8Array instead of a String.

- Some things happened in the meantime, giving birth to versions 1.3.1, 1.3.2 and 1.3.3. Version 1.3.3 was promoted the winner later on. And I forgot about the changelog. The gory details are on GitHub.

- July 17, 2013: version 1.3.0 now stable.

- June 12, 2013: version 1.3.0-rc1 just released. Introduced two new methods: compressToUTF16 and decompressFromUTF16. These allow lz-string to produce something that you can store in localStorage on IE and Firefox.

- June 12, 2013: version 1.2.0-rc1 is now stable and promoted to the 1.2.0 version number.

- May 27, 2013: version 1.1.0-rc1 is now stable and promoted to the 1.1.0 version number. Two files can be downloaded: lz-string-1.1.0.js and lz-string-1.1.0-min.js, its minified evil twin weighing a mere 3455 bytes.

- May 27, 2013: version 1.2.0: Introduced two new methods: compressToBase64 and decompressFromBase64. These allow lz-string to produce something that you can send through the network.

- May 27, 2013: Released base64-string-v1.0.0.js.

- May 23, 2013: version 1.1.0-rc1 Optimized implementation: 10-20% faster compression, twice as fast decompression on most browsers (no change on Chrome)

- May 19, 2013: version 1.0.1 Thanks to jeff-mccoy, a fix for Chrome on Mac throwing an error because of the use of a variable not declared. JavaScript is so great!

- May 10, 2013: version 1.0 Added license

- May 08, 2013: version 1.0 First release

Credits

I'd like to thank the following people, without whom LZ-String wouldn't be in the state it's in right now.

- Carl Ansley for his bugfixes.

- Samuel Rouse for various performance improvements.

- lsching17 for the Uint8Array implementation.

- Jonas Hermsmeier for the node.js support.

- J0eCool for some robustness checking in case of a truncated input.

- Olli Etuaho for setting up unit tests and various improvements.

- zenyr for various optimizations.